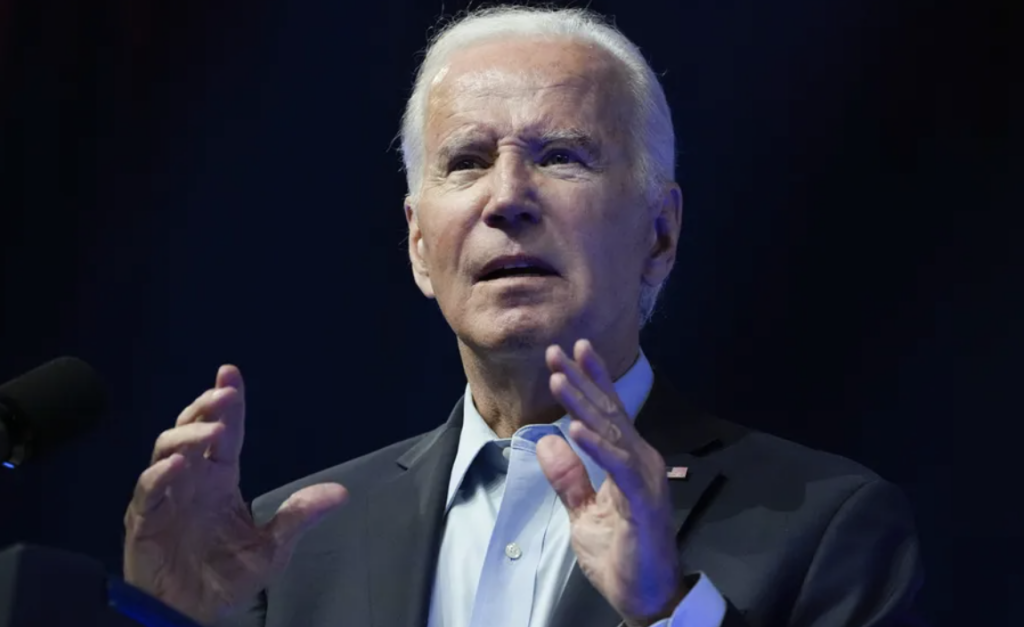

The Reason Why Healthcare is not Considered a Right in the United States

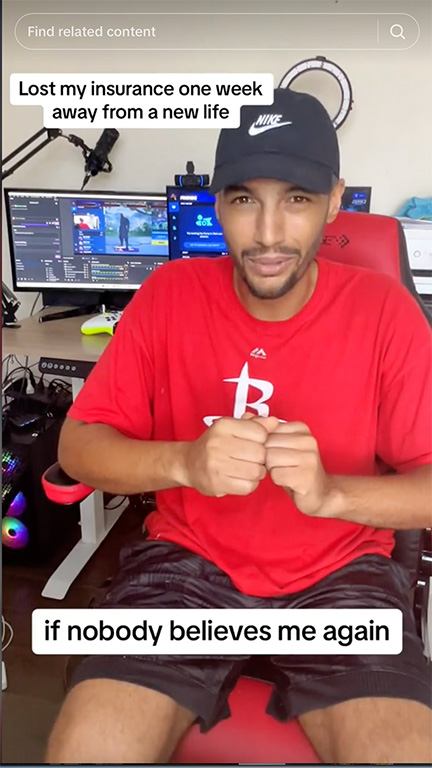

@bethechangeyoudesire Understanding the undeniable influence of systemic racism in shaping American society is crucial; when people assert that most aspects of our nation can be traced back to racism, it's because the truth lies in history. To move forward, we must learn from the past, acknowledging that systemic racism has played a significant role in […]
